Air+Touch

Air+Touch is a class of new interactions that interweave touch events with in-air gestures, offering a unified input modality that is more expressive than either modality alone. This work demonstrates how air and touch are highly complementary: touch is used to designate targets and segment in-air gestures, while in-air gestures add expressivity to touch events.

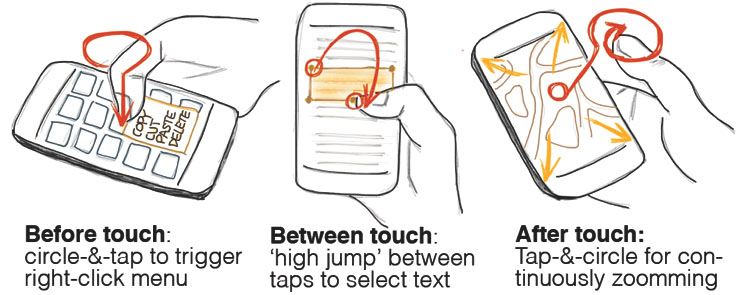

For example, a user can draw a circle in the air and then tap to trigger a context menu, perform a finger 'high jump' between two touches to select a region of text, or drag and then make an in-air 'pigtail' to copy text to the clipboard. To illustrate the potential of our approach, we built four applications that showcase seven exemplar Air+Touch interactions we created.
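To make the idea that touch segments in-air gestures more concrete, here is a minimal Python sketch. It is not the project's finger-tracking algorithm or API. It assumes a hypothetical stream of finger samples (`Sample`, with a height `z` above the screen and a `touching` flag) such as a depth camera might produce. It shows how a touch-down could close an in-air segment, and how a 'high jump' might be detected as an in-air arc that starts at one touch, ends at the next, and peaks above a hypothetical height threshold.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Sample:
    t: float        # timestamp (s)
    x: float        # position parallel to the screen (mm)
    y: float
    z: float        # hypothetical height above the screen (mm); 0 while touching
    touching: bool  # True while the finger is in contact with the screen


@dataclass
class AirSegment:
    samples: List[Sample] = field(default_factory=list)
    starts_from_touch: bool = False  # True if a touch-up opened this segment


def segment_by_touch(stream: List[Sample]) -> List[AirSegment]:
    """Split a finger-sample stream into in-air segments closed by a touch-down.

    In-air samples after the last touch have no closing touch yet and are
    not returned: in this sketch, the touch is what commits the gesture.
    """
    segments: List[AirSegment] = []
    current = AirSegment()
    was_touching = False
    for s in stream:
        if s.touching:
            if current.samples:      # a touch-down closes the open segment
                segments.append(current)
            current = AirSegment()
            was_touching = True
        else:
            if not current.samples:  # first in-air sample of a new segment
                current.starts_from_touch = was_touching
            current.samples.append(s)
            was_touching = False
    return segments


def is_high_jump(seg: AirSegment, min_peak_mm: float = 30.0) -> bool:
    """Hypothetical test: an arc between two touches that rises high enough."""
    return seg.starts_from_touch and max(s.z for s in seg.samples) >= min_peak_mm


if __name__ == "__main__":
    stream = [
        Sample(0.00, 10, 10, 0, True),    # first touch
        Sample(0.05, 12, 10, 15, False),
        Sample(0.10, 20, 10, 45, False),  # peak of the jump
        Sample(0.15, 28, 10, 12, False),
        Sample(0.20, 30, 10, 0, True),    # second touch closes the segment
    ]
    segs = segment_by_touch(stream)
    print(len(segs), is_high_jump(segs[0]))  # 1 True
```

Requiring a touch at both ends is what lets the same in-air arc mean "select the text between these two points" rather than an unrelated hover; the 30 mm threshold is only a placeholder that a real system would need to tune.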

Building Air+Touch was a lot of fun. I led the technical implementation and project logistics (including developing the finger-tracking algorithm and API using a depth-sensing camera), while Anthony focused on demo implementation, design, and paper writing. It was one of my most positive experiences collaborating with another student.