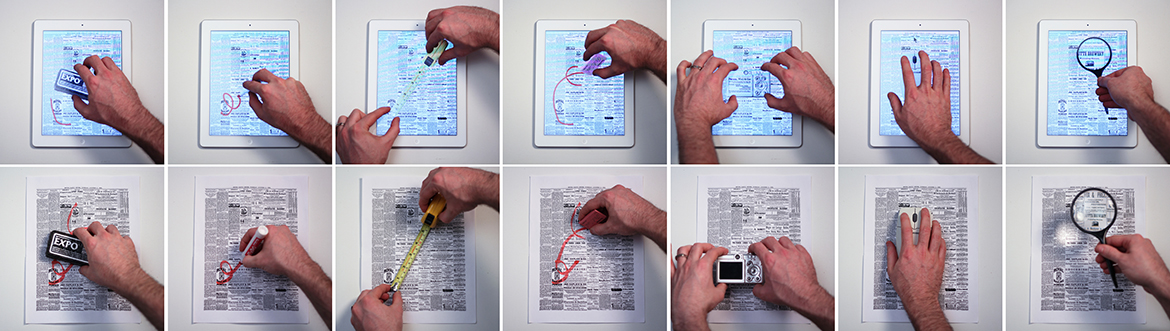

TouchTools: Leveraging Familiarity and Skill with Physical Tools to Augment Touch Interaction

TouchTools is a gesture design language and recognition engine that invokes tools or commands by mimicking how we grab tools in the real world. It is a tiny step into the future of interaction design as envisioned by Bret Victor.

Beyond the gesture language itself, a second contribution of TouchTools is the recognition algorithm. Rather than classifying on touch down, we wait 50 ms after the initial touch so that all fingers have time to land on the screen. We then compute rotation-invariant features on this set of points and feed them into a support vector machine (SVM). Details are available in the paper.
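To make the pipeline above concrete, here is a minimal sketch of the first two stages: grouping touches that arrive within 50 ms of the first one, then turning that group into a rotation-invariant feature vector. The exact features used by TouchTools are described in the paper and are not reproduced here. This sketch assumes a hypothetical feature set (touch count, sorted pairwise distances, sorted distances to the centroid), and the names `Touch`, `group_touches`, `rotation_invariant_features` and `MAX_TOUCHES` are illustrative only.

```python
import math
from dataclasses import dataclass
from itertools import combinations

GROUPING_WINDOW_MS = 50  # wait this long after the first touch before classifying
MAX_TOUCHES = 5          # hypothetical cap (one hand) so the feature vector has a fixed length


@dataclass
class Touch:
    x: float
    y: float
    t_ms: float  # time the touch landed, in milliseconds


def group_touches(touches, window_ms=GROUPING_WINDOW_MS):
    """Return the touches that landed within window_ms of the earliest touch."""
    if not touches:
        return []
    ordered = sorted(touches, key=lambda t: t.t_ms)
    t0 = ordered[0].t_ms
    return [t for t in ordered if t.t_ms - t0 <= window_ms]


def rotation_invariant_features(touches):
    """Hypothetical features: distances are unchanged when the whole hand
    is rotated or translated, so the vector is rotation-invariant."""
    if not touches:
        raise ValueError("need at least one touch")
    pts = [(t.x, t.y) for t in touches[:MAX_TOUCHES]]
    n = len(pts)
    cx = sum(p[0] for p in pts) / n
    cy = sum(p[1] for p in pts) / n

    # Sorting removes any dependence on the order in which fingers were reported.
    pairwise = sorted(math.dist(a, b) for a, b in combinations(pts, 2))
    radial = sorted(math.dist(p, (cx, cy)) for p in pts)

    # Zero-pad to a fixed length so every sample has the same dimensionality.
    max_pairs = MAX_TOUCHES * (MAX_TOUCHES - 1) // 2
    pairwise += [0.0] * (max_pairs - len(pairwise))
    radial += [0.0] * (MAX_TOUCHES - len(radial))
    return [float(n)] + pairwise + radial
```

For example, three fingers at (0, 0), (30, 0) and (0, 40) yield sorted pairwise distances of 30, 40 and 50, and rotating the whole hand leaves those values unchanged. The fixed length matters because an SVM expects every sample to have the same number of features; vectors like these could then be passed to an off-the-shelf SVM such as scikit-learn's `SVC` via its `fit` and `predict` methods.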

Credit where credit is due: much of the code for TouchTools was developed by Robert Xiao. This work was very much a collaboration, and I'm honored to have been a part of it.

TouchTools is a Qeexo technology. Demos are available for both iOS and Android; to see one, please contact info@qeexo.com.